Separation of Duties in Software Solutions: A Case Study in Overcee

Separation (or Segregation) of Duties (SoD) in business and Information Technology is an internal control that restricts the power of any one individual in order to guard against mistakes and fraud. R. A. Botha and J. H. P. Eloff, in “Separation of Duties for Access Control Enforcement in Workflow Environments”, describe SoD as:

Separation of duty, as a security principle, has as its primary objective the prevention of fraud and errors. This objective is achieved by disseminating the tasks and associated privileges for a specific business process among multiple users. This principle is demonstrated in the traditional example of separation of duty found in the requirement of two signatures on a cheque.

Today we’re looking at this concept, and how it helps keep your Overcee installation (and, by extension, your IT infrastructure) secure, while still delivering the power and performance associated with Overcee.

The exact details of how Overcee processes pending jobs are of little importance to most of our clients; what matters to them is that it works when it should. However, understanding the processes going on in the background, and why we implement a Separation of Duties, can really help when planning your Tools and Tool Sequences, and can be critical in understanding the Job Manager and Worker log files if something goes awry.

Separation of Duties in a Software Context

While the business concerns itself with reducing fraud and errors through the physical separation of duties, the software engineering team must look at it from a slightly different perspective.

While the problem is very much the same, it can sometimes be harder to visualise a solution. In our example, we’ll be talking about a Windows service, which runs under a particular user account (Local System in this case). Creating a separation of duties in this case necessitates the use of a completely different user account for running Tools (more on that later).

The options we considered when approaching this problem were:

Impersonation

The process of using local or network resources by impersonating the network identity of another user. This requires the user’s credentials to be available at runtime, meaning they can only be stored as securely as two-way (reversible) encryption allows, which is typically considered bad practice.

Spawning a New Process

Launching a dedicated tool-running executable using the required credentials. This also requires the credentials to be stored for runtime access.

Separation of Services

Creating a separate service which runs with different credentials and is responsible solely for the running of tools.

We went with the separation of services because it is easier to manage, easier for the user to configure, doesn’t require us to store any credentials (Windows takes care of this for us), and it fit our application model better.

Sidenote: we are already looking at alternatives as (spoiler alert) the next major release of Overcee is planned to include Domain Zoning, so that different sets of credentials can be configured for the Worker to use in different OUs (to further increase security). Currently we’re planning a hybrid solution, but as always any suggestions can be sent to us on Twitter @Int64Software or emailed to us at support@int64software.com.

About Overcee’s Job Management Process

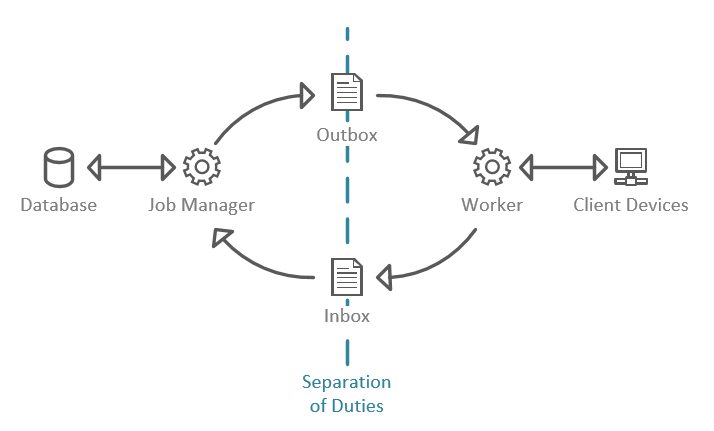

In Overcee, Jobs (created when a Tool or Tool Sequence is set to run on one or more computers) are placed in a queue.

The Job Manager is responsible for de-queuing these jobs for the Worker, and then receiving the results and converting them into the format that the database expects. The Worker receives jobs, fires up the appropriate tool, and then returns the result to the Job Manager.

The Job Manager, therefore, needs to have read/write access to the Overcee database, while the Worker can be safely isolated from it. Why?

Consider a scenario: a user with otherwise minimal permissions to Overcee offers you a “harmless” executable tool they wrote for performing some minor task. They inform you that it is best run from the server and passed a machine name on the command line. So you put the executable in an accessible folder, set up a locked-down Local Execute (execute on the Worker server) tool, and make it available to the user.

What you later find out is that this user had an ulterior motive and had figured out the database entries they’d need to change to give themselves full administrator access to Overcee.

But because of the separation of duties (SoD) between the Job Manager and the Worker, even though the executable runs on the server, it doesn’t have permission to access the database and so can’t make the changes that the user desired.

Now, of course, we’re all more careful than that, and we know that the mere act of running an untrusted executable on the server is opening yourself up to all manner of troubles. But this is just one example of why SoD is important to Overcee.

Also: Reliability

Because of the huge variety of tools we include with Overcee, and because we expect people to make their own with the powerful API, problems are going to happen. And despite our best efforts, sometimes these problems may cause unexpected results in the Worker service. This is another reason why this separation is important: if the Worker needs to be restarted, it can be restarted without affecting any of the other Overcee services. It’s just more robust this way.

So let’s look at the nitty-gritty.

The Job Manager – De-queuing

The Job Manager continuously checks for incoming jobs which have been created by users. These jobs (or rather one computer at one step of a job) are popped off the top of a queue by order of: Status (only Pending or Deferred jobs are de-queued), Priority, Computer Status (computers which still have things to do in the job), and the Tool Sequence step number.
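The ordering described above can be sketched in a few lines of Python. This is only an illustration, not Overcee’s actual code: the `QueueEntry` fields and the `next_entry` function are hypothetical names, and we assume that a lower priority number means “more urgent” and that Status and Computer Status act as filters before Priority and step number decide the order.

```python
from dataclasses import dataclass

# Only these two job statuses are eligible for de-queuing (per the article).
DEQUEUABLE = {"Pending", "Deferred"}


@dataclass
class QueueEntry:
    """One computer at one step of a job (hypothetical shape)."""
    job_id: int
    computer: str
    status: str             # job status, e.g. "Pending", "Deferred", "Complete"
    priority: int           # assumption: lower number = higher priority
    computer_pending: bool  # True while this computer still has work in the job
    step: int               # Tool Sequence step number


def next_entry(entries):
    """Return the entry that should be de-queued next, or None if nothing is eligible."""
    candidates = [
        e for e in entries
        if e.status in DEQUEUABLE and e.computer_pending
    ]
    if not candidates:
        return None
    # Among eligible entries, prefer higher priority, then the earliest step.
    return min(candidates, key=lambda e: (e.priority, e.step))
```

Calling `next_entry` repeatedly (and updating each entry it returns) would mimic the “pop off the top of the queue” behaviour for a small in-memory list.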

When this happens, the individual computer in this job is allocated a GUID to identify that it has been de-queued, and the Job Manager then grabs all of the relevant information that the Worker will need (computer info, tool info, tool inputs, etc.) and creates an Outbox data file which the Worker threads monitor.

Once this file has been saved, the Job Manager goes back to looking for incoming jobs or completed job information.
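To make the hand-off concrete, here is a rough Python sketch of writing such a work item to an Outbox folder. The JSON layout, the file naming and the `write_outbox_file` function are our own assumptions for illustration; the real Outbox file format is not described here.

```python
import json
import os
import uuid
from pathlib import Path


def write_outbox_file(outbox_dir, computer, tool, inputs):
    """Write one work item to the Outbox and return the GUID that marks it as de-queued."""
    outbox = Path(outbox_dir)
    outbox.mkdir(parents=True, exist_ok=True)

    work_id = str(uuid.uuid4())  # the GUID allocated to this computer/step
    payload = {"id": work_id, "computer": computer, "tool": tool, "inputs": inputs}

    # Write under a temporary name first, then rename, so that a Worker thread
    # watching the folder never picks up a half-written file.
    tmp_path = outbox / f"{work_id}.tmp"
    final_path = outbox / f"{work_id}.json"
    tmp_path.write_text(json.dumps(payload), encoding="utf-8")
    os.replace(tmp_path, final_path)
    return work_id
```

The write-then-rename step is a design choice of this sketch: it keeps the file-based hand-off safe without the two services needing to share a lock.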

The Worker

When a file appears in the Outbox, a worker thread will pick it up for processing. It should now have all the information it needs to carry out the work, so it loads the appropriate tool, sends it the input information and runs it against the current computer.

Once it receives a result, it writes it to an Inbox file, which is how the data gets back to the Job Manager.
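A Worker thread’s handling of a single file might look something like the following Python sketch. Everything named here is hypothetical: the `TOOLS` registry, the `echo_tool` example, the JSON layout and the `process_outbox_file` function are stand-ins chosen for illustration, not the real Overcee tool API. Note that nothing in it touches the database; the Worker only reads one file and writes another.

```python
import json
from pathlib import Path


def echo_tool(computer, inputs):
    """Hypothetical tool: returns a status and some output for one computer."""
    return {"status": "Complete", "output": f"{computer}: {inputs.get('message', '')}"}


# Hypothetical registry mapping tool names to callables.
TOOLS = {"echo": echo_tool}


def process_outbox_file(path, inbox_dir):
    """Run the tool named in an Outbox file and write the result to the Inbox."""
    work = json.loads(Path(path).read_text(encoding="utf-8"))
    tool = TOOLS.get(work["tool"])
    if tool is None:
        result = {"status": "Failed", "output": f"unknown tool {work['tool']!r}"}
    else:
        try:
            result = tool(work["computer"], work["inputs"])
        except Exception as exc:
            # A misbehaving tool is reported as a failure rather than
            # being allowed to take the whole Worker down.
            result = {"status": "Failed", "output": str(exc)}

    inbox = Path(inbox_dir)
    inbox.mkdir(parents=True, exist_ok=True)
    out_path = inbox / f"{work['id']}.json"
    out_path.write_text(json.dumps({"id": work["id"], **result}), encoding="utf-8")

    Path(path).unlink()  # the work item has been consumed
    return out_path
```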

Sidenote: The Worker – Deferral

Some long-running tools will, instead of making the Worker wait for them, return a “Deferred” status and an amount of time to wait before checking in on them again.

When such a result is returned to the Job Manager, rather than marking this part of the job as Complete or Failed according to the data received, the Job Manager returns the computer to the job queue along with the deferral time, so that it will be picked up again once that time has passed.

This allows us to run things like application installs without having to worry about tying up an entire Worker thread for the duration of the install.
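The deferral logic can be sketched as follows. This is a simplified illustration under our own assumptions: queue entries are plain dictionaries, and the `retry_after_seconds` and `not_before` field names are hypothetical.

```python
from datetime import datetime, timedelta


def handle_result(entry, result, now):
    """Update a queue entry (a plain dict here) from a Worker result."""
    if result["status"] == "Deferred":
        # Put the computer back in the queue, but not before the wait has elapsed.
        entry["status"] = "Deferred"
        entry["not_before"] = now + timedelta(seconds=result["retry_after_seconds"])
    else:
        # Complete or Failed: this part of the job is finished.
        entry["status"] = result["status"]
        entry.pop("not_before", None)
    return entry


def is_ready(entry, now):
    """True if the entry may be de-queued at the given time."""
    if entry["status"] == "Pending":
        return True
    return entry["status"] == "Deferred" and now >= entry.get("not_before", now)
```

For example, a result of `{"status": "Deferred", "retry_after_seconds": 60}` leaves the entry ineligible for the next minute, during which the Worker thread is free to do other work.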

The Job Manager – Storing Results

Finally, when an Inbox file appears, the Job Manager will read it and store the relevant data back in the database, completing the cycle.
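As a final illustration, this Python sketch shows the shape of that last step, with SQLite standing in for the Overcee database purely for convenience. The `results` table, its columns and the `store_inbox_file` function are assumptions made for this example, not the real schema. The point to notice is that this is the only side of the hand-off that holds a database connection.

```python
import json
import sqlite3
from pathlib import Path

# Hypothetical schema used only for this sketch.
SCHEMA = "CREATE TABLE IF NOT EXISTS results (work_id TEXT PRIMARY KEY, status TEXT, output TEXT)"


def store_inbox_file(conn, path):
    """Read one Inbox file, store its result in the database, then remove the file."""
    reply = json.loads(Path(path).read_text(encoding="utf-8"))
    with conn:  # commits on success, rolls back if the insert fails
        conn.execute(SCHEMA)
        conn.execute(
            "INSERT INTO results (work_id, status, output) VALUES (?, ?, ?)",
            (reply["id"], reply["status"], reply.get("output", "")),
        )
    Path(path).unlink()  # only delete the file once the data is safely stored
    return reply["id"]
```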

Conclusion

So you should now see that the Job Manager and Worker operate in a kind of symbiosis: dependent on each other, yet working independently on their own tasks. It is this arrangement that lets us maintain both security and reliability.

How have you implemented Separation of Duties in your solutions? Tweet us @Int64Software.

Int64 Software Ltd